Three-dimensional co-ordinate systems come in two flavors: right-handed and left-handed. The naming comes from the old memory aid of curling your fingers to sweep the x-axis onto the y-axis, with your thumb indicating the direction of the z-axis. In the gaming world different technologies have made different choices; for example, DirectX is typically left-handed and OpenGL is typically right-handed (though each has ways of adapting). Unity has chosen a left-handed co-ordinate system, and Blender a right-handed one.

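The physics/mathematics world uses right-handed co-ordinates. The convention enters through the definition of the vector cross product, which uses the right-hand rule. Unity’s Vector3 class implements the cross product with the same component formula, so the cross product of the x and y unit vectors is the z unit vector, just as in the right-handed definition; what differs in Unity is the direction in which that z-axis is drawn.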
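The component formula behind the cross product can be sketched in a few lines. This is a language-neutral illustration written in Python, not Unity code; the function name `cross` is an assumption made for the example.

```python
def cross(a, b):
    """Standard component formula for the cross product of two 3-vectors."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by,
            az * bx - ax * bz,
            ax * by - ay * bx)

x_axis = (1, 0, 0)
y_axis = (0, 1, 0)

# Sweeping x onto y yields +z. The numbers are the same in either handedness;
# which way +z points on screen depends on how the axes are drawn.
z_axis = cross(x_axis, y_axis)
print(z_axis)  # (0, 0, 1)
```

The formula itself carries no handedness; the handedness only appears when the resulting components are drawn along a particular set of axes.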

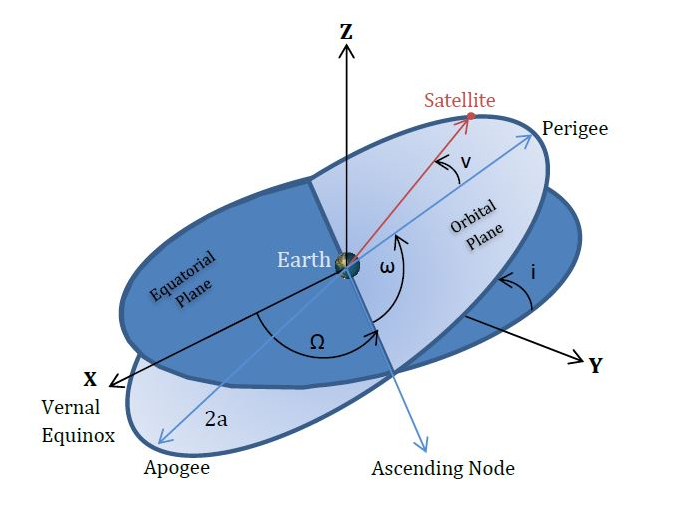

The orbital elements in orbital mechanics are defined with respect to a right-handed co-ordinate system, as shown in the figure (from www.gsc-europa.eu).

Viewing Orbits in Left-Handed Co-ordinates

What are the implications of viewing an orbit defined with a RH co-ordinate system in Unity’s LH co-ordinate system?

The orbital elements (a, e, i, Omega, omega, nu) can be grouped into the shape parameters (a, e), the orientation parameters (i, Omega, omega) and the “position” in the orbit, nu. The choice of left- or right-handed viewing co-ordinates does not affect the shape parameters, so they need not be considered any further.

In an empty Unity 3D scene the default camera position is (0, 1, -10) and the camera is looking towards the origin. In a scene with a center object at (0,0,0) and a circular orbit using an OrbitUniversal component, the remaining orbital elements are affected as follows:

- nu: ok (counter clockwise from x-axis)

- Omega: ok (counter clockwise from x-axis)

- omega: ok (counter-clockwise from x-axis)

- inclination: reversed (an orbit with positive inclination dips below the XY plane for nu in (0, Pi))

The value of Z is correct (it is positive), but in the LH co-ordinate system it renders in the reverse sense, so the appearance does not match what a physics textbook would show.
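To see that the physics value of Z really is positive, the position can be computed directly from the orbital elements. The following is a minimal Python sketch using the standard textbook conversion in a right-handed frame; the function name `position_from_elements` and the sample values are assumptions for illustration and are not taken from Gravity Engine.

```python
import math

def position_from_elements(a, e, i, Omega, omega, nu):
    """Position in the right-handed physics frame; all angles in radians."""
    r = a * (1.0 - e * e) / (1.0 + e * math.cos(nu))  # orbit radius at nu
    u = omega + nu                                     # angle from the ascending node
    x = r * (math.cos(Omega) * math.cos(u)
             - math.sin(Omega) * math.sin(u) * math.cos(i))
    y = r * (math.sin(Omega) * math.cos(u)
             + math.cos(Omega) * math.sin(u) * math.cos(i))
    z = r * math.sin(u) * math.sin(i)
    return (x, y, z)

# Circular orbit, 30 degree inclination, a quarter of the way around.
x, y, z = position_from_elements(a=10.0, e=0.0, i=math.radians(30.0),
                                 Omega=0.0, omega=0.0, nu=math.pi / 2)
print(z > 0)  # prints True: positive inclination gives positive z for nu in (0, Pi)
```

The same positive z is then drawn along Unity's z-axis, which points away from the default camera rather than towards it, and that is what produces the reversed appearance.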

“Fixing” the view

If it is important that the scene view match what is shown in physics books then there are some options, all of which have some corresponding complications. Bear in mind the physics is still doing what is expected and there will be no inaccuracies in the encounters; it is just being viewed in a z-reversed way. It is also worth noting that no camera rotation or change of orientation alters this: the left-handed and right-handed worlds are related by a reflection across the x-y plane.
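The reason no camera move can help is that every rotation matrix has determinant +1, while a reflection has determinant -1, so no sequence of rotations can produce a reflection. A small Python sketch of that check follows; the helper names `det3` and `rotation_about_x` are assumptions made for the example.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def rotation_about_x(angle):
    """Rotation matrix for a turn of `angle` radians about the x-axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]

# Reflection across the x-y plane: (x, y, z) -> (x, y, -z)
z_reflection = [[1, 0, 0], [0, 1, 0], [0, 0, -1]]

print(det3(rotation_about_x(math.pi)))  # close to +1: a half turn is still a rotation
print(det3(z_reflection))               # -1: a reflection
```

A half turn about the x-axis does flip z, but it flips y as well, so the orbit still does not match the textbook picture.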

One option would be to simply change the sign of the inclination. This corrects the visual appearance of the orbit but changes the internal physics values: all the physics Z values are reversed.
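Why only Z is affected follows from the standard textbook expression for the position in terms of the orbital elements, sketched here under the usual conventions, with r the orbit radius and u = omega + nu:

```latex
\begin{aligned}
x &= r\,(\cos\Omega\,\cos u - \sin\Omega\,\sin u\,\cos i) \\
y &= r\,(\sin\Omega\,\cos u + \cos\Omega\,\sin u\,\cos i) \\
z &= r\,\sin u\,\sin i
\end{aligned}
```

The inclination enters x and y only through cos i, which is unchanged when i is replaced by -i, while z depends on sin i and therefore changes sign.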

Another option is to change the physics to make the default orbital plane the XZ plane instead of the XY plane. This swap of coordinates ensures that when these orbits are viewed in Unity’s LH coordinate system the orbit nodes appear as expected. This can be done by selecting the “XZ Orbits” toggle in the Gravity Engine inspector. When it is selected, all orbits and orbit predictors default to placing zero-inclination orbits in the XZ plane.
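As a rough illustration of the idea only, the sketch below assumes the swap amounts to exchanging the y and z components. The helper names `physics_to_world` and `world_to_physics` are hypothetical and this is not Gravity Engine's code; the actual mapping used by the “XZ Orbits” toggle may differ.

```python
def physics_to_world(p):
    """Hypothetical helper: exchange y and z (an assumption, not Gravity Engine's code)."""
    x, y, z = p
    return (x, z, y)

def world_to_physics(w):
    """Inverse of the swap above; exchanging two axes is its own inverse."""
    x, y, z = w
    return (x, z, y)

# A point on a zero-inclination orbit lies in the physics XY plane...
physics_point = (3.0, 4.0, 0.0)
world_point = physics_to_world(physics_point)
print(world_point)  # (3.0, 0.0, 4.0): it lands in the world XZ plane

# ...and the round trip recovers the physics value.
print(world_to_physics(world_point) == physics_point)  # True
```

Exchanging two axes is itself a reflection, which is why a swap of this kind can bridge the right-handed physics frame and the left-handed view where a camera rotation cannot.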

With this option the game logic must take care to distinguish the world co-ordinates from the physics co-ordinates. This is particularly important for any NBody objects that have their position in the physics world taken from their transform (as opposed to being under the control of an orbit class). In this case the physics world will load the value from the transform, and then update it with the z value reversed.